Securing Virtual Private Cloud (VPC) Workloads with Wireguard Split-tunneling and Route 53 Private Zones

Amazon Virtual Private Cloud (VPC), allows you to create secure and logically isolated networked environments where you can deploy private …

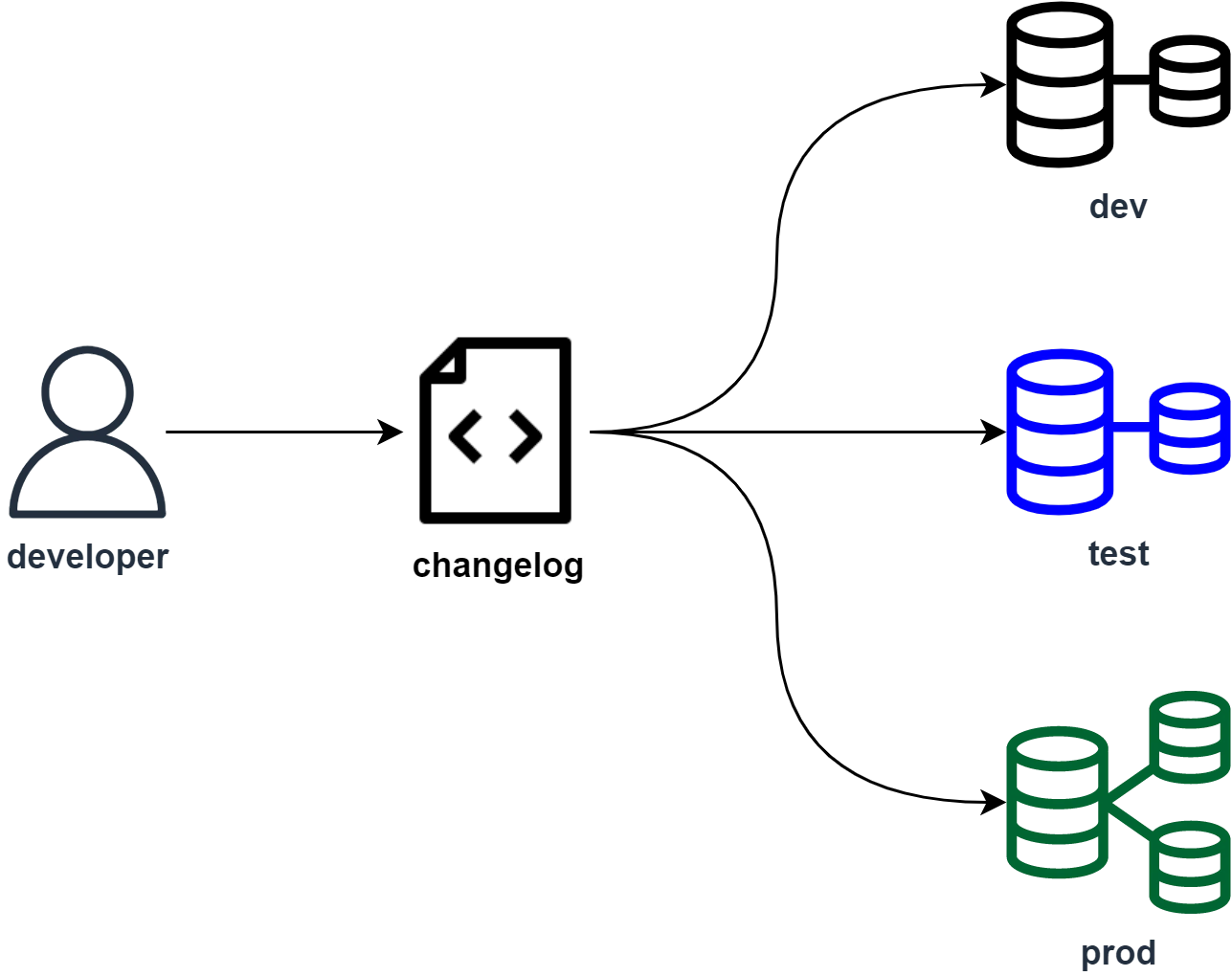

Database versioning allows teams to maintain consistency across environments and encourages collaboration by enabling multiple developers to evolve the database schema without conflicts or duplicated work. Much like application versioning, it lets developers make changes locally, test them, and have them reviewed like code before they are promoted to different deployment environments.

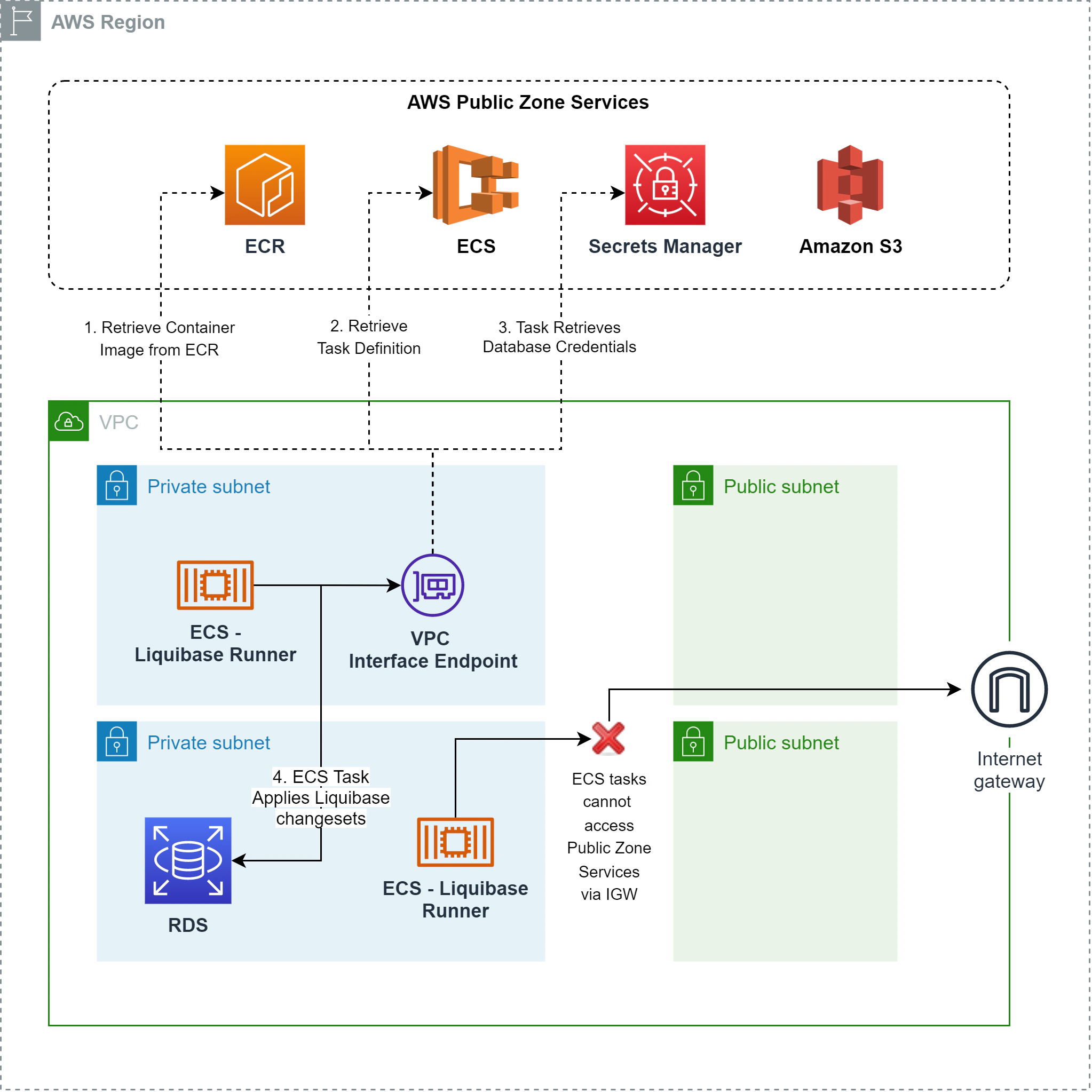

In this post, we’re exploring what a CI/CD workflow looks like for running secure Liquibase migrations that apply database schema and data changes against a private RDS PostgreSQL database. The architecture for this solution is outlined in the diagram below:

Liquibase, in a nutshell, is one of the most popular database versioning tools. It allows developers to apply database schema and data changes with traceability and the ability to roll back from undesired states or failure events. Furthermore, you can apply traditional CI/CD practices, implement branching strategies, testing and deployments, and effectively practice “Database-as-code”, treating data and schema as versioned assets just like application code.

For this exercise, I prepared a Docker image that adds a run script as a layer on top of the base Liquibase image. The built container contains the changelog and, when run, accepts database credentials as environment variables and executes Liquibase migrations against the target database.
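To make the run script idea concrete, here is a minimal sketch of what such a script could look like. The variable names (`DB_HOST`, `DB_PORT`, `DB_NAME`, `DB_USERNAME`, `DB_PASSWORD`, `CHANGELOG_FILE`) and the `update` argument are assumptions for illustration, not the actual script used in the image, and the command form assumes the Liquibase 4.4+ CLI syntax.

```shell
#!/bin/sh
# Sketch of a container run script. All variable names are hypothetical.
set -eu

# Build a PostgreSQL JDBC URL from host, port and database name.
jdbc_url() {
  printf 'jdbc:postgresql://%s:%s/%s\n' "$1" "$2" "$3"
}

# Apply all pending changesets to the target database.
run_migrations() {
  liquibase update \
    --url="$(jdbc_url "$DB_HOST" "${DB_PORT:-5432}" "$DB_NAME")" \
    --username="$DB_USERNAME" \
    --password="$DB_PASSWORD" \
    --changelog-file="${CHANGELOG_FILE:-changelog.xml}"
}

# Only migrate when explicitly asked, e.g. `run.sh update` as the entrypoint.
case "${1:-}" in
  update) run_migrations ;;
esac
```

With this shape, the image entrypoint would call the script with `update`, and the credentials arrive as environment variables at task start.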

We need to create an Elastic Container Registry (ECR) repository first by issuing the command below:

aws ecr create-repository --repository-name lb-migrations-runner --region ap-southeast-1

From here, we’ll simply build the Docker image and push it to the ECR repo:

docker build -t lb-migrations-runner .

aws ecr get-login-password --region ap-southeast-1 | docker login --username AWS --password-stdin <account-id>.dkr.ecr.ap-southeast-1.amazonaws.com

docker tag lb-migrations-runner:latest <account-id>.dkr.ecr.ap-southeast-1.amazonaws.com/lb-migrations-runner:latest

docker push <account-id>.dkr.ecr.ap-southeast-1.amazonaws.com/lb-migrations-runner:latest

AWS Fargate is a serverless compute offering from AWS that allows users to run containerized workloads without managing virtual machines. With Fargate, you simply specify a task definition, which can contain one or more containers per task, and it runs. You also get fast horizontal scalability, since you scale your application out by adding tasks rather than EC2 instances.

This way, we don’t have a standby instance waiting idly for Liquibase changesets. We are not paying for idle compute, and the migration task only runs when a new changeset is pushed.

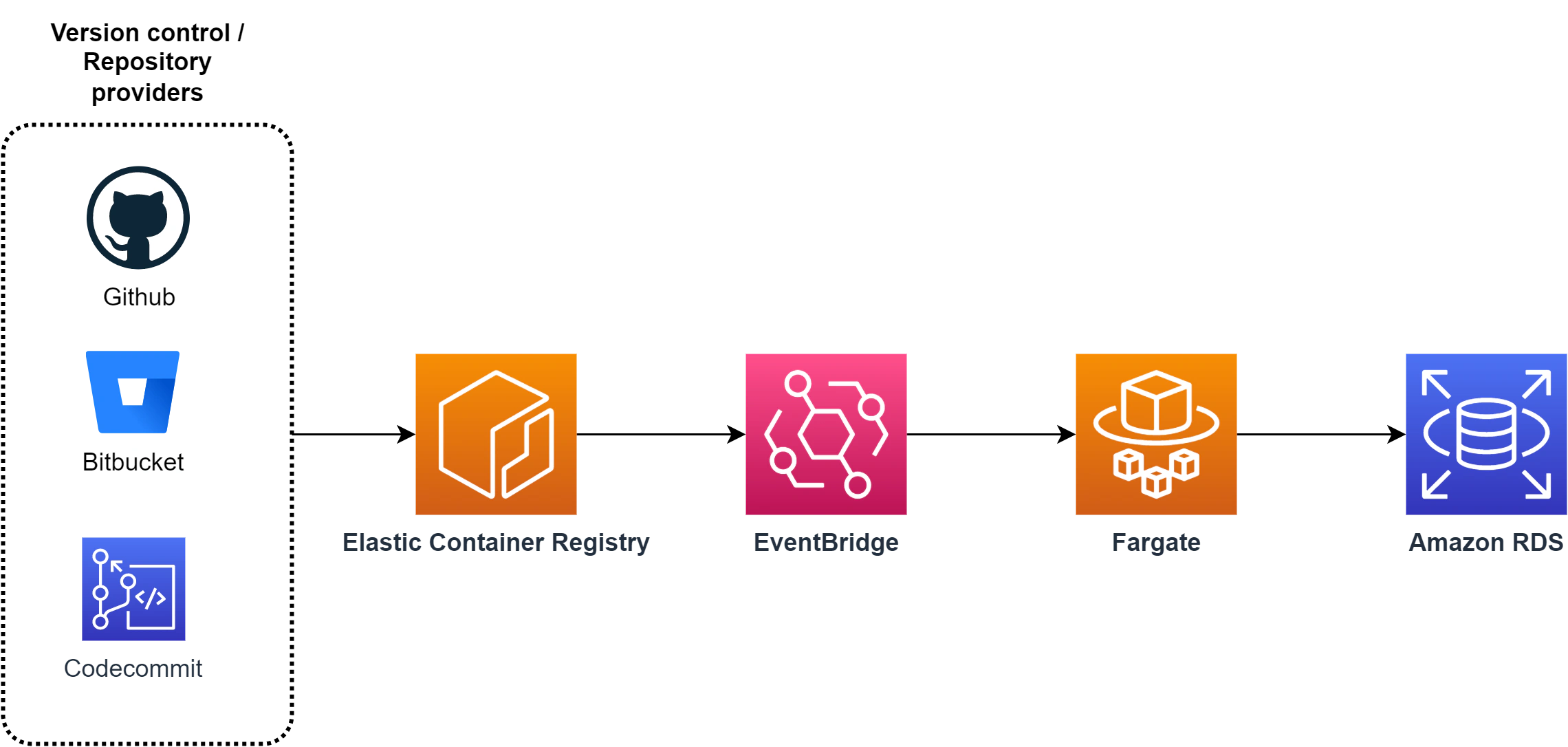

We’re not gonna build a full CI/CD pipeline in this post, but we’ll emulate the change process by pushing the Docker image directly to ECR and responding to the event using EventBridge, which lets ECS sort of “subscribe” to the change events. For an overview of what the workflow looks like, please take a look at the diagram below:

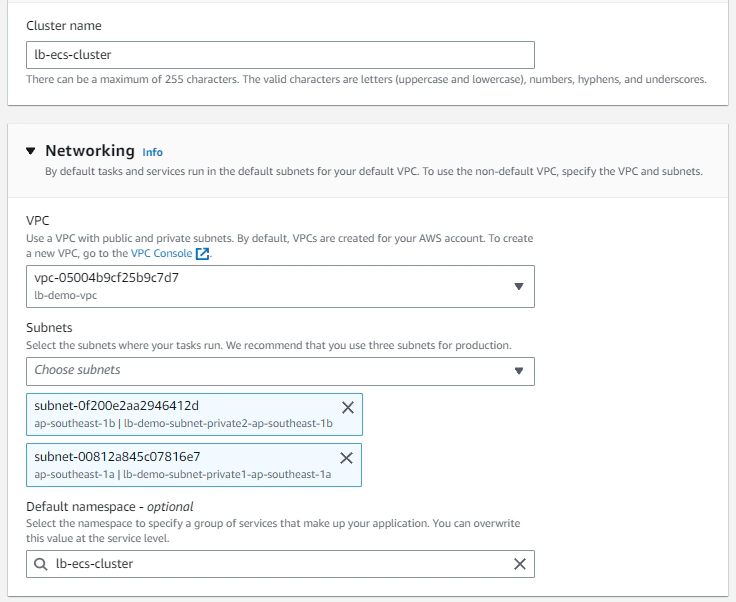

To run containerized workloads in ECS, we need to define an ECS Cluster first.

Note that we want to deploy this cluster in a VPC, specifying our private subnets so that tasks run here will never be allowed to communicate through the public internet. Leaving everything as default without specifying anything in the Infrastructure section will make this cluster a serverless one (only Fargate tasks will be allowed to run).

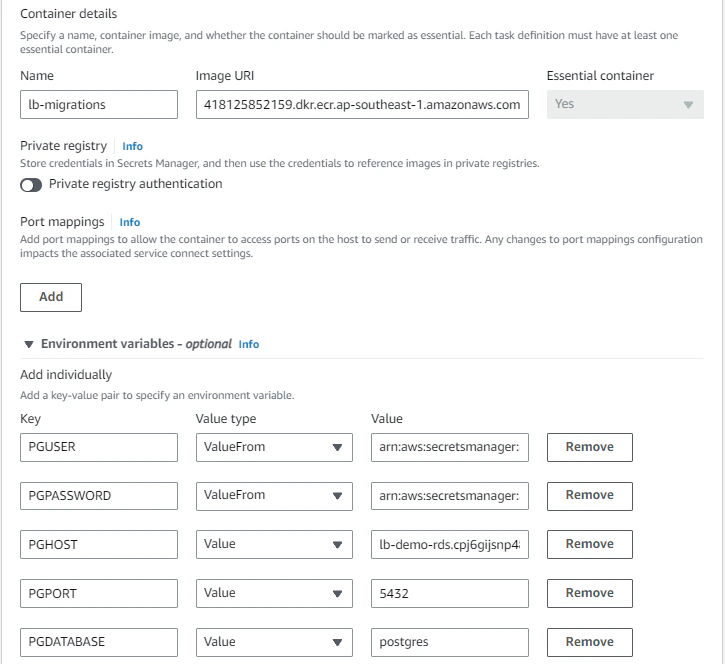

Task definitions are essentially blueprints of how our container application will run. This is where you specify task and container-level CPU, memory and storage allocations. For this example, we can define our task definition like below:
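If you prefer the CLI over the console, a Fargate task definition along these lines could be registered as sketched here. The account ID, role name, secret ARN, JSON keys inside the secret (`username`, `password`), log group name and CPU/memory sizes are all placeholder assumptions, not values from the original setup.

```shell
#!/bin/sh
set -eu

# Placeholder values (assumptions): replace with your own.
ACCOUNT_ID="${ACCOUNT_ID:-123456789012}"
REGION="${REGION:-ap-southeast-1}"
EXECUTION_ROLE="${EXECUTION_ROLE:-lb-migrations-execution-role}"
SECRET_ARN="${SECRET_ARN:-arn:aws:secretsmanager:${REGION}:${ACCOUNT_ID}:secret:lb-db-credentials-AbCdEf}"

# Print a Fargate task definition for the migration runner.
task_definition() {
  cat <<EOF
{
  "family": "lb-migrations-runner",
  "networkMode": "awsvpc",
  "requiresCompatibilities": ["FARGATE"],
  "cpu": "256",
  "memory": "512",
  "executionRoleArn": "arn:aws:iam::${ACCOUNT_ID}:role/${EXECUTION_ROLE}",
  "containerDefinitions": [
    {
      "name": "lb-migrations-runner",
      "image": "${ACCOUNT_ID}.dkr.ecr.${REGION}.amazonaws.com/lb-migrations-runner:latest",
      "essential": true,
      "secrets": [
        { "name": "DB_USERNAME", "valueFrom": "${SECRET_ARN}:username::" },
        { "name": "DB_PASSWORD", "valueFrom": "${SECRET_ARN}:password::" }
      ],
      "logConfiguration": {
        "logDriver": "awslogs",
        "options": {
          "awslogs-group": "/ecs/lb-migrations-runner",
          "awslogs-create-group": "true",
          "awslogs-region": "${REGION}",
          "awslogs-stream-prefix": "liquibase"
        }
      }
    }
  ]
}
EOF
}

# Write the JSON to disk and register it (requires AWS credentials).
register_task_definition() {
  task_definition > task-definition.json
  aws ecs register-task-definition \
    --cli-input-json file://task-definition.json \
    --region "$REGION"
}
```

Calling `register_task_definition` creates a new revision each time. The `secrets` entries use `valueFrom`, so the credentials are resolved from Secrets Manager when the task starts rather than being stored in the task definition.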

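Note that you have to define a task execution role that contains permissions to access ECR, SSM (if you opt to store configurations in Parameter Store), Secrets Manager and KMS (to decrypt encrypted secrets). ECS uses this role, rather than the task role, to pull the image and inject secrets before the container starts. The IAM policy document may look like this: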

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ServicePermissions",
      "Effect": "Allow",
      "Action": [
        "secretsmanager:GetSecretValue",
        "secretsmanager:DescribeSecret",
        "ssm:GetParameters",
        "ssm:GetParameter",
        "kms:Decrypt"
      ],
      "Resource": [
        "arn:aws:ssm:*:<account-id>:parameter/*",
        "arn:aws:secretsmanager:*:<account-id>:secret:*",
        "arn:aws:kms:ap-southeast-1:<account-id>:key/*"
      ]
    },
    {
      "Sid": "DefaultPermissions",
      "Effect": "Allow",
      "Action": [
        "ecr:GetAuthorizationToken",
        "ecr:BatchCheckLayerAvailability",
        "ecr:GetDownloadUrlForLayer",
        "ecr:BatchGetImage",
        "logs:CreateLogStream",
        "logs:PutLogEvents",
        "logs:CreateLogGroup"
      ],
      "Resource": "*"
    }
  ]
}

Another thing to take note of is that we’re taking database credentials from Secrets Manager and not injecting them directly into container tasks. This means that we’ll have to change the Value type to ValueFrom and add the ARN of our secret.

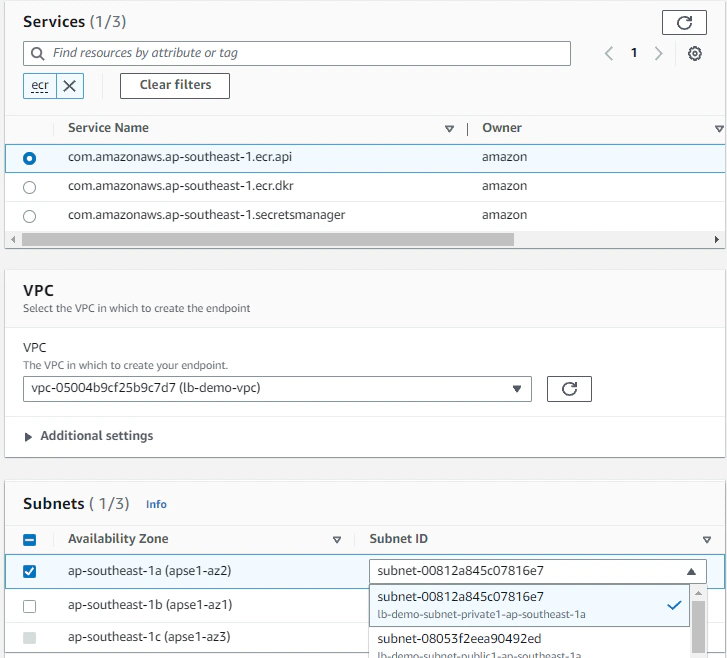

For ECS container tasks to run, they must be able to communicate with ECS service endpoints. This lets ECS orchestrate how the tasks are run and allows ECS container instances or Fargate to retrieve the task definition. Furthermore, they need to communicate with the ECR service to pull images from container registries.

Since we deployed our cluster in the private subnets, by default our tasks won’t have access to the ECS service endpoint and will not work. We then have several options for running our ECS tasks. We can:

While #1 works, this solution requires public internet access and traffic leaving the VPC through the internet gateway. Remember that we do not have any requirement to communicate with a public external service, so access to the internet is completely unnecessary.

Using VPC endpoints creates an airtight method of database access for our Liquibase runners, ensuring that at no point will ECS traffic go through the internet or outside the VPC. Communication between our containers and RDS remains within the AWS network.

Assuming we already have a VPC, we can provision VPC interface endpoints in the VPC section of the AWS console like below:
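For reference, the same endpoints could be provisioned from the CLI roughly as sketched here. The list of services is an assumption based on what a Fargate task typically needs when it pulls from ECR, writes to CloudWatch Logs and reads from Secrets Manager. The VPC, subnet, security group and route table IDs are placeholders.

```shell
#!/bin/sh
set -eu

# Placeholder values (assumptions): replace with your own resource IDs.
REGION="${REGION:-ap-southeast-1}"
VPC_ID="${VPC_ID:-vpc-0123456789abcdef0}"
SUBNET_IDS="${SUBNET_IDS:-subnet-aaaa1111 subnet-bbbb2222}"
ENDPOINT_SG="${ENDPOINT_SG:-sg-0123456789abcdef0}"
ROUTE_TABLE_ID="${ROUTE_TABLE_ID:-rtb-0123456789abcdef0}"

# Interface endpoint service names for ECR, CloudWatch Logs and Secrets Manager.
interface_services() {
  for svc in ecr.api ecr.dkr logs secretsmanager; do
    echo "com.amazonaws.${REGION}.${svc}"
  done
}

# Create the endpoints (requires AWS credentials).
create_endpoints() {
  for service in $(interface_services); do
    # SUBNET_IDS is intentionally unquoted so each ID becomes its own argument.
    aws ec2 create-vpc-endpoint \
      --vpc-id "$VPC_ID" \
      --vpc-endpoint-type Interface \
      --service-name "$service" \
      --subnet-ids $SUBNET_IDS \
      --security-group-ids "$ENDPOINT_SG" \
      --private-dns-enabled \
      --region "$REGION"
  done

  # ECR stores image layers in S3, which is reached through a gateway endpoint.
  aws ec2 create-vpc-endpoint \
    --vpc-id "$VPC_ID" \
    --vpc-endpoint-type Gateway \
    --service-name "com.amazonaws.${REGION}.s3" \
    --route-table-ids "$ROUTE_TABLE_ID" \
    --region "$REGION"
}
```

The security group attached to the interface endpoints needs to allow inbound HTTPS (port 443) from the task security group, otherwise image pulls and secret lookups will time out.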

Some important things to note here:

Now that we’ve successfully set up all the core components, we can glue the automation together by capturing ECR repository push events using Amazon EventBridge.

To do this, we can create an Event Rule with an event pattern like the one below:

{
  "source": ["aws.ecr"],
  "detail-type": ["ECR Image Action"],
  "detail": {
    "action-type": ["PUSH"],
    "result": ["SUCCESS"],
    "repository-name": ["lb-migrations-runner"],
    "image-tag": ["latest"]
  }
}

The event pattern captures successful PUSH events for the latest tag in the lb-migrations-runner ECR repository. This means that every time our version control and build service (Bitbucket, CodeBuild, GitHub) successfully pushes a new Docker image containing our changesets, an event is captured.

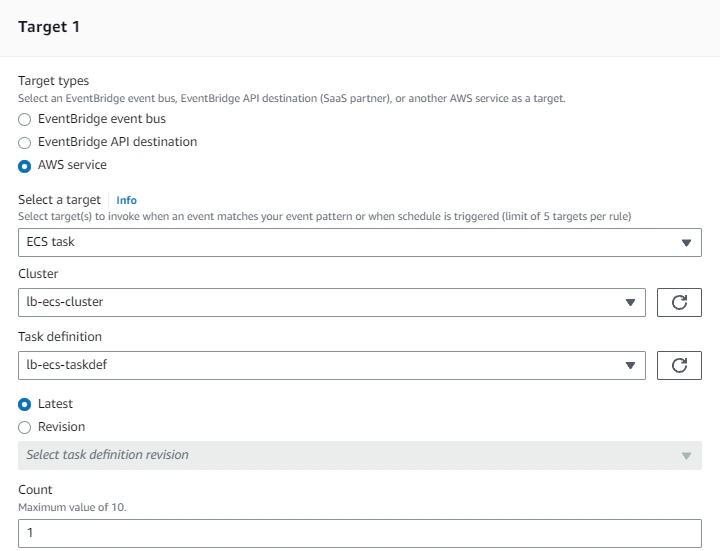

Then we can respond to that event by specifying the event target to be our ECS task:

Note that we are also required to specify the subnets where our tasks are going to be executed. Furthermore, our tasks need a security group that is allowed by our RDS security group for database access. We also need to specify in the Compute Options section that our Launch type is FARGATE.

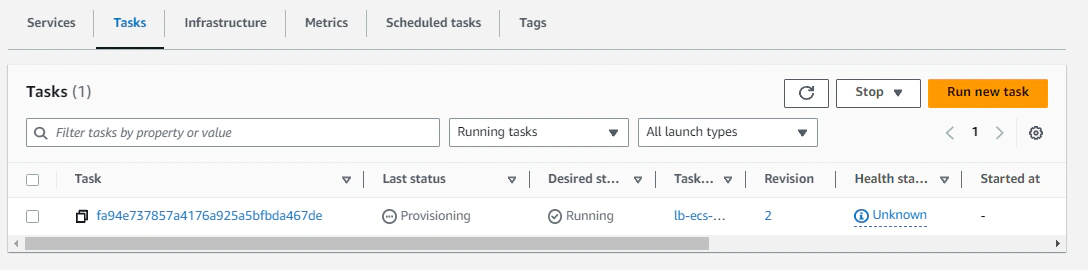
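The rule and its ECS target can also be created from the CLI. The sketch here assumes a rule name, cluster name, EventBridge role, task definition revision, subnet and security group that are all placeholders. The role must allow `ecs:RunTask` and `iam:PassRole` for the task's roles.

```shell
#!/bin/sh
set -eu

# Placeholder values (assumptions): replace with your own.
ACCOUNT_ID="${ACCOUNT_ID:-123456789012}"
REGION="${REGION:-ap-southeast-1}"
RULE_NAME="${RULE_NAME:-lb-migrations-on-push}"
CLUSTER_NAME="${CLUSTER_NAME:-lb-migrations-cluster}"
EVENTS_ROLE="${EVENTS_ROLE:-lb-migrations-events-role}"
TASK_DEF_REVISION="${TASK_DEF_REVISION:-1}"
SUBNET_ID="${SUBNET_ID:-subnet-aaaa1111}"
TASK_SG="${TASK_SG:-sg-0123456789abcdef0}"

# Event pattern: successful pushes of the latest tag to our repository.
event_pattern() {
  cat <<'EOF'
{
  "source": ["aws.ecr"],
  "detail-type": ["ECR Image Action"],
  "detail": {
    "action-type": ["PUSH"],
    "result": ["SUCCESS"],
    "repository-name": ["lb-migrations-runner"],
    "image-tag": ["latest"]
  }
}
EOF
}

# Target: run one Fargate task in a private subnet, without a public IP.
ecs_target() {
  cat <<EOF
[
  {
    "Id": "run-liquibase-task",
    "Arn": "arn:aws:ecs:${REGION}:${ACCOUNT_ID}:cluster/${CLUSTER_NAME}",
    "RoleArn": "arn:aws:iam::${ACCOUNT_ID}:role/${EVENTS_ROLE}",
    "EcsParameters": {
      "TaskDefinitionArn": "arn:aws:ecs:${REGION}:${ACCOUNT_ID}:task-definition/lb-migrations-runner:${TASK_DEF_REVISION}",
      "TaskCount": 1,
      "LaunchType": "FARGATE",
      "NetworkConfiguration": {
        "awsvpcConfiguration": {
          "Subnets": ["${SUBNET_ID}"],
          "SecurityGroups": ["${TASK_SG}"],
          "AssignPublicIp": "DISABLED"
        }
      }
    }
  }
]
EOF
}

# Create the rule and attach the target (requires AWS credentials).
create_rule_and_target() {
  aws events put-rule --name "$RULE_NAME" \
    --event-pattern "$(event_pattern)" --region "$REGION"
  ecs_target > targets.json
  aws events put-targets --rule "$RULE_NAME" \
    --targets file://targets.json --region "$REGION"
}
```

Running `create_rule_and_target` mirrors the console steps above: `AssignPublicIp` stays `DISABLED` because the task reaches AWS services through the VPC endpoints.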

We can check whether the execution succeeds by pushing an image to the ECR repository:

docker push <account-id>.dkr.ecr.ap-southeast-1.amazonaws.com/lb-migrations-runner:latest

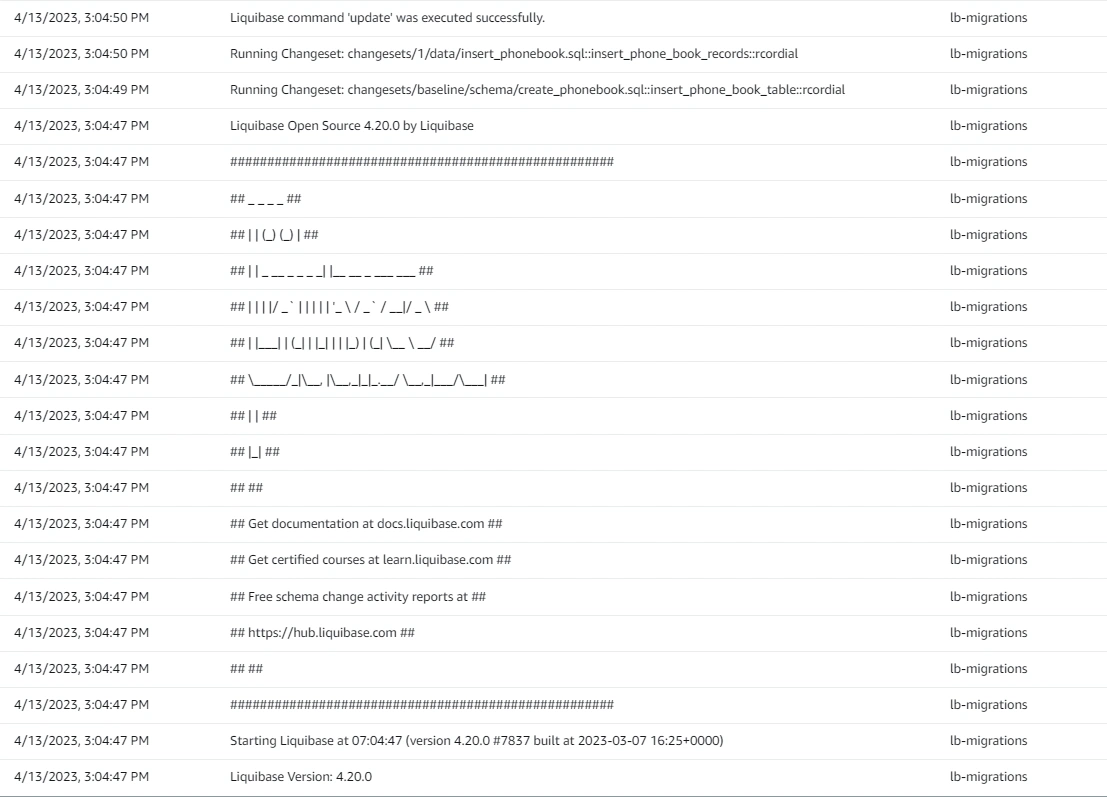

Assuming you have followed everything up to this point, the container logs generated by the Liquibase executable should display something like below:

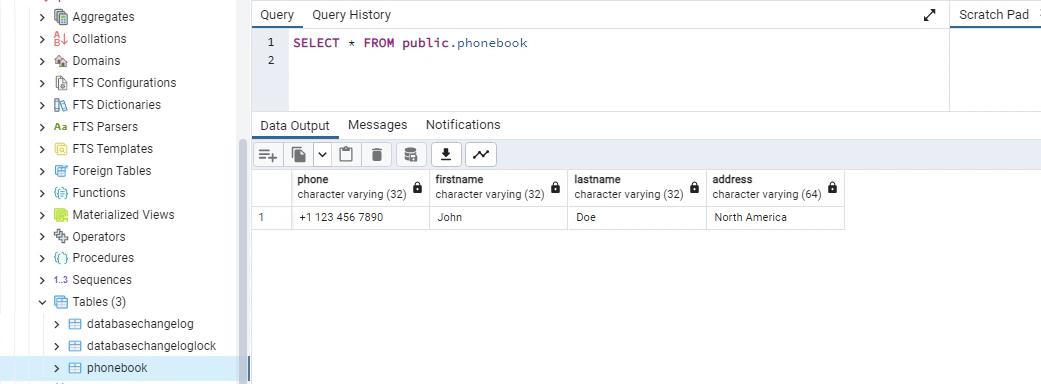

Lastly, we can verify that the tables and the data have been successfully applied by querying our Postgres database:
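One way to check is to query Liquibase's own tracking table, `databasechangelog`, which records every changeset it has applied. This is a minimal sketch: the connection variables (`DB_HOST`, `DB_PORT`, `DB_NAME`, `DB_USERNAME`) are placeholder names, and since the database is private, the command has to run from somewhere that can reach it, such as a host inside the VPC.

```shell
#!/bin/sh
set -eu

# SQL listing applied changesets in the order Liquibase executed them.
changelog_query() {
  echo "SELECT id, author, filename, dateexecuted, exectype FROM databasechangelog ORDER BY orderexecuted;"
}

# Run the query with psql (reads the password from PGPASSWORD or prompts for it).
verify_migrations() {
  psql -h "$DB_HOST" -p "${DB_PORT:-5432}" -U "$DB_USERNAME" -d "$DB_NAME" \
    -c "$(changelog_query)"
}
```

Each row returned by `verify_migrations` corresponds to one changeset, so the newest changeset from the pushed image should appear at the bottom.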

To summarize what we did in this post:

- Set up a Liquibase project and created a sample changelog with changesets.

- Provisioned an ECS cluster and defined a Fargate-type ECS task.

- Set up automatic execution of our Liquibase ECS task by creating an Event Rule that captures new image pushes to ECR.

- Utilized VPC endpoints to privately and securely apply the changelog to an RDS PostgreSQL database.
