
At its core, AWS Lambda is a serverless compute platform where developers can write small, independent and often single-purpose applications without managing the underlying infrastructure they run on. It also gives cloud consumers a flexible billing model: nothing is paid for “idle” compute, yet the compute resource remains highly available whenever it is required.

One use case for AWS Lambda is running short-lived, routine computational tasks on a schedule or at a fixed rate. In our case, that task is running database backups.

PostgreSQL is one of the most popular open-source relational databases out there. Amazon RDS already provides a fully managed Postgres offering (point-in-time recovery, snapshots, Multi-AZ and other rich features), but its current pricing does not make it attractive for small projects: it is often more than 2x the price of an EC2 instance with the same compute shape.

Current on-demand hourly pricing for the Singapore (ap-southeast-1) Region:

RDS instance

| Type | Hourly rate (USD) |
|---|---|
| db.m5.large | 0.247 |
| db.m5.xlarge | 0.494 |

Equivalent EC2 instance

| Type | Hourly rate (USD) |
|---|---|
| m5.large | 0.12 |
| m5.xlarge | 0.24 |
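To put those hourly rates in monthly terms, here is a small sketch that converts them for an always-on instance and computes the RDS-to-EC2 ratio. The rates come from the tables above; the 730-hours-per-month figure (8,760 hours a year divided by 12) is an assumption for an instance that never stops.

```typescript
// Hourly on-demand rates (USD) for ap-southeast-1, copied from the tables above.
const pricingRows = [
  { size: "large", rdsHourly: 0.247, ec2Hourly: 0.12 },
  { size: "xlarge", rdsHourly: 0.494, ec2Hourly: 0.24 },
];

// Assumption: an always-on instance runs about 730 hours a month (8,760 h / 12).
const HOURS_PER_MONTH = 730;

// Monthly cost rounded to cents, which also hides floating-point noise.
function monthlyCost(hourly: number): number {
  return Math.round(hourly * HOURS_PER_MONTH * 100) / 100;
}

for (const row of pricingRows) {
  const ratio = row.rdsHourly / row.ec2Hourly;
  console.log(
    `${row.size}: RDS $${monthlyCost(row.rdsHourly).toFixed(2)}/month vs ` +
      `EC2 $${monthlyCost(row.ec2Hourly).toFixed(2)}/month (${ratio.toFixed(2)}x)`
  );
}
```

For the large size this works out to $180.31 versus $87.60 per month, a ratio of about 2.06x, and the xlarge size has the same ratio.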

That is not to say we should do away with RDS entirely: for a business, the cost of data loss can be far greater than the “savings” gained by not using it.

So, depending on the level of service and reliability required, a self-hosted PostgreSQL database on an EC2 instance might suffice for small, non-mission-critical applications with a tolerable RTO/RPO. And like any good cloud architect, we need to make sure we have a backup/DR plan.

We can create a backup strategy in a variety of ways. We can:

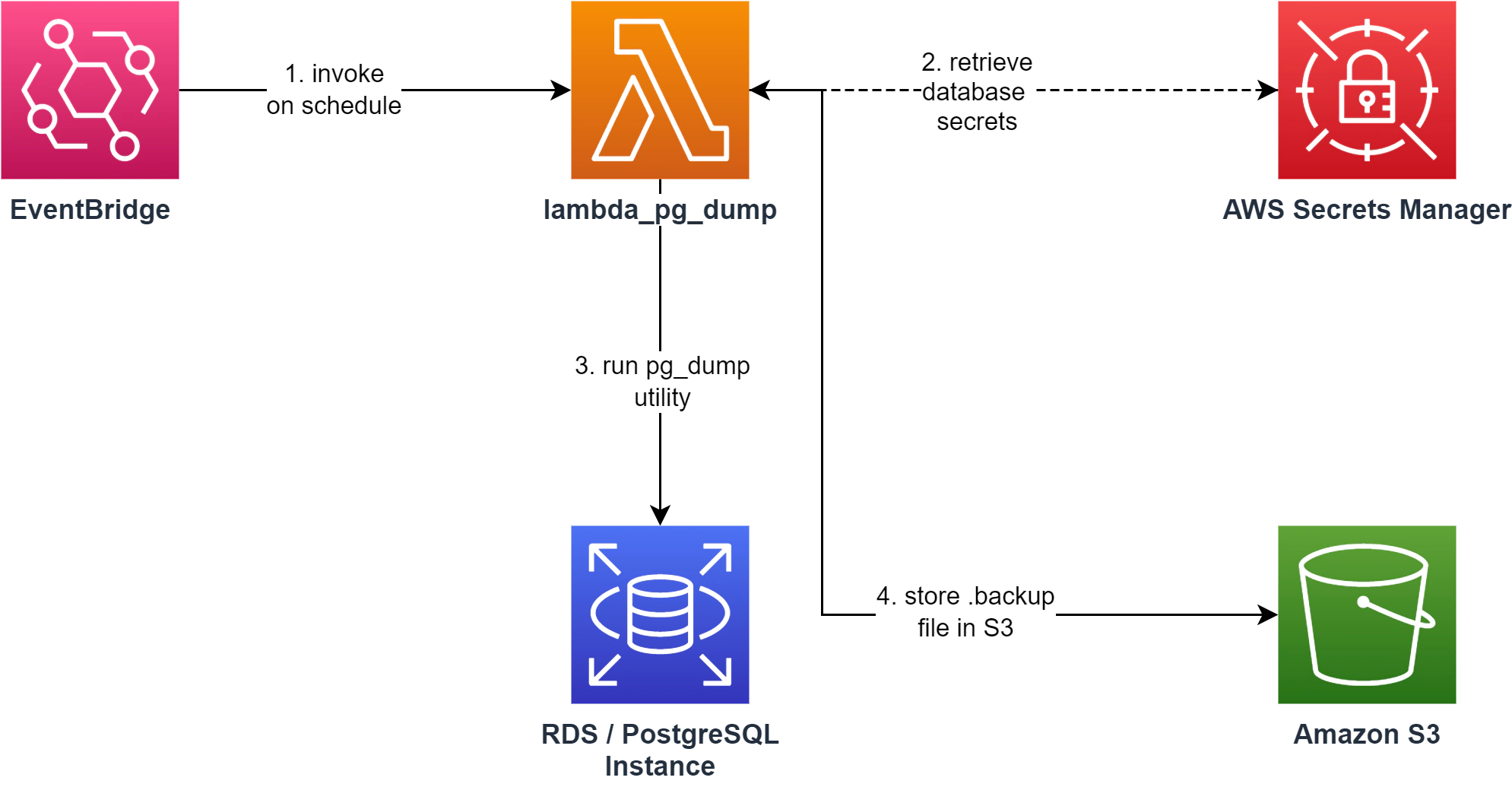

We’ll be implementing a solution outlined in the architecture diagram below:

For this exercise, I prepared a Lambda function that serves as a “wrapper” for a compiled native binary of the pg_dump utility and runs it as a child process.
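To make the “wrapper” idea concrete, here is a minimal sketch of how such a function might assemble the pg_dump command line from database credentials. The `DbCredentials` field names and the `buildPgDumpInvocation` helper are hypothetical, not the repo's code; the flags are standard pg_dump options, and `PGPASSWORD` is the environment variable libpq reads for the password.

```typescript
// Hypothetical credential shape; the real function may name these fields differently.
interface DbCredentials {
  host: string;
  port: number;
  username: string;
  password: string;
  dbname: string;
}

interface PgDumpInvocation {
  args: string[];
  // Extra environment variables for the child process.
  env: Record<string, string>;
}

// Builds the argv and environment for a pg_dump child process.
// The password is passed via PGPASSWORD rather than argv, so it does not
// appear in process listings.
function buildPgDumpInvocation(creds: DbCredentials, outFile: string): PgDumpInvocation {
  return {
    args: [
      "--host", creds.host,
      "--port", String(creds.port),
      "--username", creds.username,
      "--dbname", creds.dbname,
      "--format", "custom", // compressed archive, restored with pg_restore
      "--no-password",      // never prompt; fail instead if authentication fails
      "--file", outFile,
    ],
    env: { PGPASSWORD: creds.password },
  };
}
```

The wrapper would then hand `args` and `env` to Node's `child_process.spawn` (merging `env` into the existing `process.env`), pointing `--file` at a path under `/tmp`, which is the writable ephemeral storage in Lambda.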

To build our function, clone the repo above and, from the root project directory, run:

```bash
npm run build
```

This script installs the dependencies and packages the application as a zip file (lambda_pg_dump.zip) that we can upload to AWS Lambda.

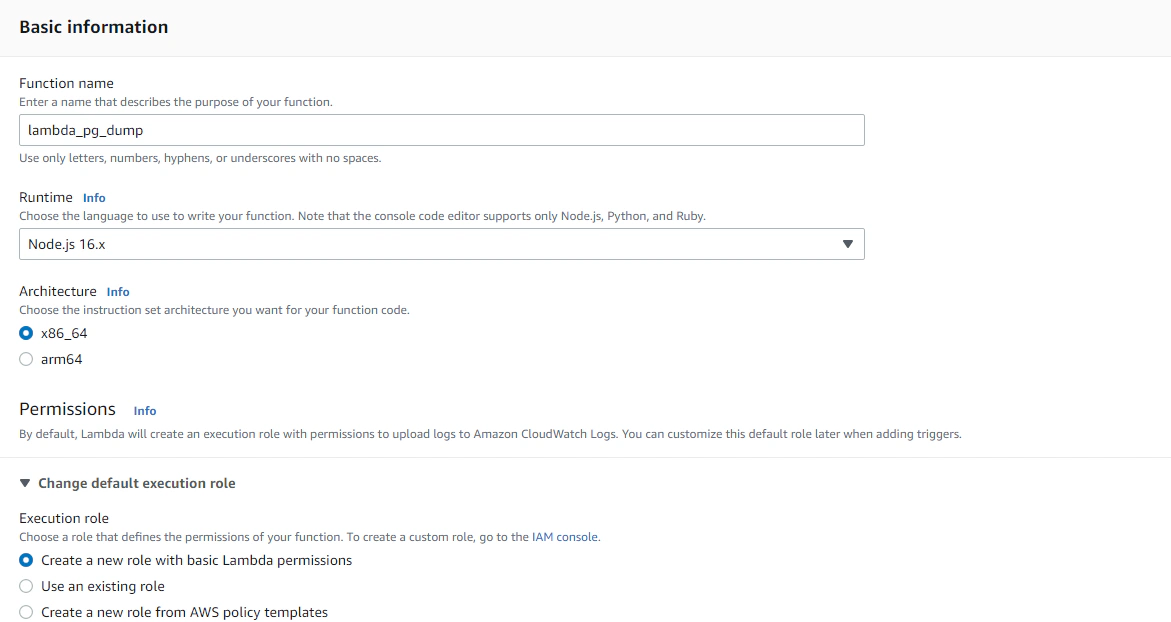

Let’s head over to the AWS console and create our Lambda function. Give the function a name, set the runtime to Node.js 16.x, and let the console create a new role with basic Lambda permissions (we will add more permissions to it later on).

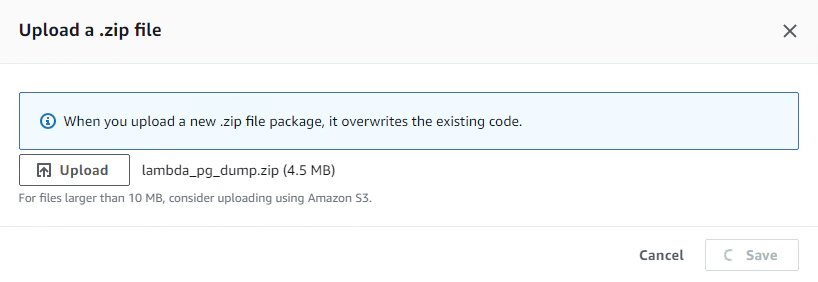

After creation, we upload our source bundle under the Code panel. Luckily, our function package is only 4.5 MB, so we can upload it directly here.

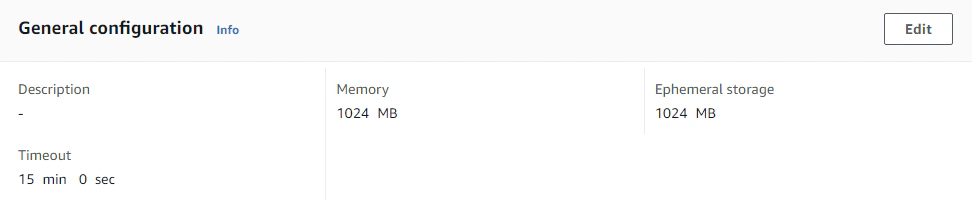

Lastly, we need to configure memory, ephemeral storage and timeout based on the expected size of the database we are dumping. For this exercise, we can set both memory and ephemeral storage to 1024 MB. The most important setting here is the timeout: we need to give our function ample time to dump the database, so it doesn't hurt to set it to 10 or even 15 minutes (the maximum Lambda allows).
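To sanity-check these settings before deploying, here is a small sketch that compares a config against Lambda's limits (memory 128 to 10,240 MB, ephemeral storage 512 to 10,240 MB, timeout 1 to 900 seconds) and against an estimated dump size. It assumes the function writes the dump to `/tmp` before uploading it to S3; the `checkBackupConfig` helper and the dump-size estimate are illustrative.

```typescript
// Hypothetical settings for the backup function, in the units the console uses.
interface BackupFunctionConfig {
  memoryMb: number;
  ephemeralStorageMb: number;
  timeoutSeconds: number;
}

// Returns a list of problems; an empty list means the config looks usable.
function checkBackupConfig(cfg: BackupFunctionConfig, estimatedDumpMb: number): string[] {
  const problems: string[] = [];
  const inRange = (label: string, value: number, min: number, max: number): void => {
    if (value < min || value > max) {
      problems.push(`${label} = ${value} is outside the allowed range ${min}-${max}`);
    }
  };
  // AWS Lambda limits for memory, /tmp storage and timeout.
  inRange("memoryMb", cfg.memoryMb, 128, 10240);
  inRange("ephemeralStorageMb", cfg.ephemeralStorageMb, 512, 10240);
  inRange("timeoutSeconds", cfg.timeoutSeconds, 1, 900);
  // Assumption: the dump is written to /tmp first, so it must fit there.
  if (estimatedDumpMb >= cfg.ephemeralStorageMb) {
    problems.push(
      `a ~${estimatedDumpMb} MB dump will not fit in ${cfg.ephemeralStorageMb} MB of /tmp`
    );
  }
  return problems;
}
```

With the settings above (1024 MB memory, 1024 MB storage, a 900-second timeout) and a dump of a few hundred MB, the check returns an empty list; asking for a 30-minute timeout would be flagged, since Lambda caps it at 15 minutes.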

As a best practice, we should never store database credentials in the application code. We could pass them in the event JSON, but that seems like a questionable security practice to me. Putting the credentials in the function’s environment variables is more secure, since it gives us the benefit of KMS encryption.

Better still, we can use AWS Secrets Manager. As its name suggests, it gives applications a safer way to protect secrets such as database credentials, with features for managing, retrieving and automatically rotating secret values.

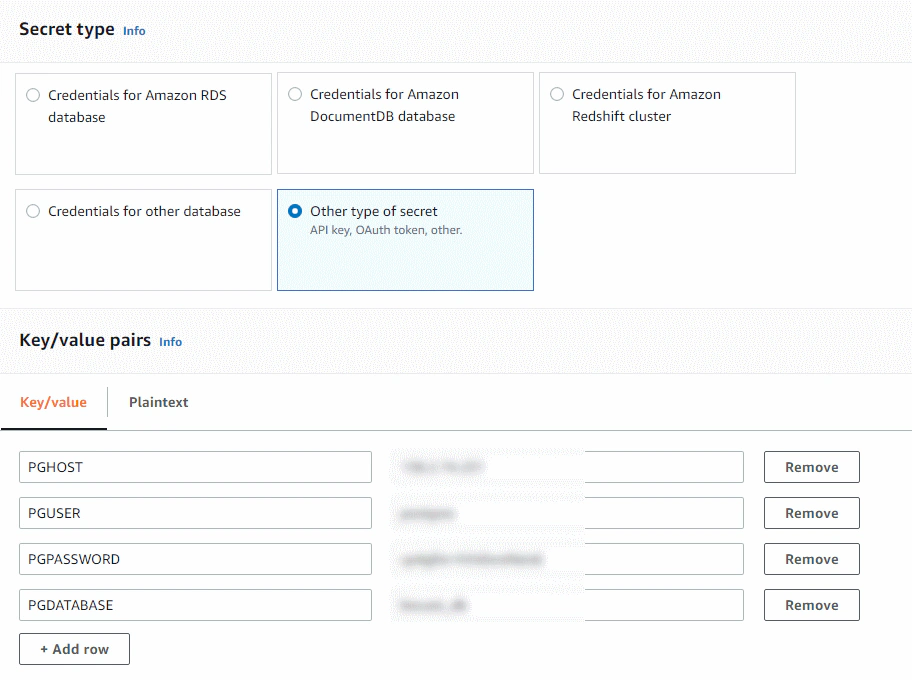

To create a secret, we go to the Secrets Manager service in the AWS console. We’ll create a key/value pair secret and populate it with the values our application requires.

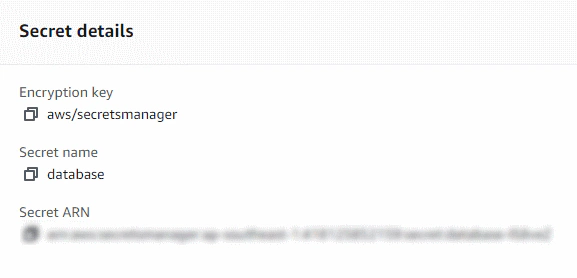

We can optionally configure it to use a different encryption key, but we’ll leave everything as default for now. Then we just need to give it a name, such as “database_secret”, and hit Store to save our secret.

We need to take note of the secret ARN or name, since we’ll pass this value to our Lambda function so that the application can look up the database credentials.
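Once stored, the secret reaches the function as the `SecretString` field of a GetSecretValue response, which for a key/value secret is a flat JSON object. Here is a minimal sketch of turning that string into typed credentials. It assumes the keys are `host`, `port`, `username`, `password` and `dbname`, which is a common convention; the actual keys are whatever you entered in the console, and `parseDbSecret` is a hypothetical helper.

```typescript
// Assumed key names; adjust to match the keys entered in the console.
interface DbCredentials {
  host: string;
  port: number;
  username: string;
  password: string;
  dbname: string;
}

function parseDbSecret(secretString: string): DbCredentials {
  const parsed: unknown = JSON.parse(secretString);
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("secret is not a JSON object");
  }
  const raw = parsed as { [key: string]: unknown };

  // Fail fast with a clear message instead of letting pg_dump fail later.
  const requireString = (key: string): string => {
    const value = raw[key];
    if (typeof value !== "string" || value === "") {
      throw new Error(`secret is missing a non-empty "${key}" value`);
    }
    return value;
  };

  // Values typed into the console's key/value editor are stored as strings,
  // so the port is converted here; 5432 (PostgreSQL's default) is assumed if absent.
  const port = raw["port"] === undefined ? 5432 : Number(raw["port"]);
  if (Math.floor(port) !== port || port < 1 || port > 65535) {
    throw new Error(`invalid port: ${String(raw["port"])}`);
  }

  return {
    host: requireString("host"),
    port,
    username: requireString("username"),
    password: requireString("password"),
    dbname: requireString("dbname"),
  };
}
```

Validating the secret at the start of each run means a missing or mistyped key surfaces as a clear error in the function's logs rather than as an opaque connection failure from pg_dump.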

Our Lambda application accepts the following event JSON parameter.

1{

2 "SECRET": "<secret arn or name>",

3 "REGION": "<aws region>",

4 "S3_BUCKET": "<s3 bucket name>",

5 "PREFIX": "<optional prefix/folder in s3>"

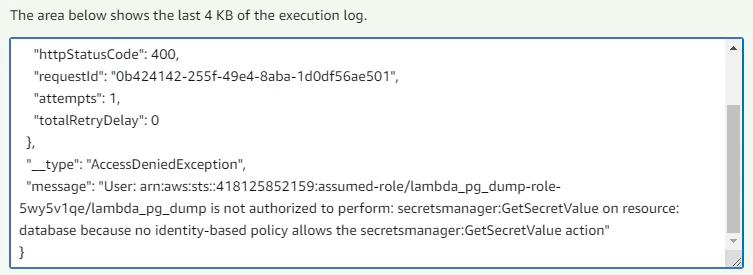

6}After executing the Lambda with this test event, you might find an error message like below:

The error is caused by our Lambda function not having permission to retrieve the database credentials stored as a secret in AWS Secrets Manager. To remedy this, we must attach another permission policy (an inline policy works) to our Lambda execution role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": "arn:aws:s3:::rcordial/*"
    },
    {
      "Effect": "Allow",
      "Action": "secretsmanager:GetSecretValue",
      "Resource": "arn:aws:secretsmanager:<aws-region>:<account-id>:secret:database-XXXX"
    }
  ]
}
```

The JSON document above is a policy that we attach to our Lambda execution role. It gives the role permission to retrieve secret values from AWS Secrets Manager and to put objects into our S3 bucket (replace `rcordial` with your own bucket name). With it attached, our Lambda function should now execute successfully.
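If you manage several backup jobs, or want to keep the S3 permission tighter than the whole bucket, it can help to generate this policy instead of hand-editing it. Here is a minimal sketch; `backupPolicy` is a hypothetical helper, and scoping `s3:PutObject` to the backup prefix is an optional tightening that is not part of the policy above.

```typescript
// Minimal IAM policy document types (only the fields used here).
interface PolicyStatement {
  Effect: "Allow" | "Deny";
  Action: string | string[];
  Resource: string;
}

interface PolicyDocument {
  Version: "2012-10-17";
  Statement: PolicyStatement[];
}

// Builds the execution-role policy for the backup function. An empty prefix
// reproduces the bucket-wide "<bucket>/*" resource used above.
function backupPolicy(bucket: string, prefix: string, secretArn: string): PolicyDocument {
  // Strip leading/trailing slashes so "/backups/" and "backups" behave the same.
  const folder = prefix.replace(/^\/+|\/+$/g, "");
  const objectPath = folder ? `${folder}/*` : "*";
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: ["s3:PutObject", "s3:PutObjectAcl"],
        Resource: `arn:aws:s3:::${bucket}/${objectPath}`,
      },
      {
        Effect: "Allow",
        Action: "secretsmanager:GetSecretValue",
        Resource: secretArn,
      },
    ],
  };
}
```

`JSON.stringify(backupPolicy("rcordial", "", secretArn), null, 2)` yields a document equivalent to the one above, while passing a prefix such as `"backups"` restricts uploads to `rcordial/backups/*`.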

To invoke our Lambda function automatically, we can use another AWS service, Amazon EventBridge, and more specifically its Scheduler capability. It lets us deliver events to targets such as our Lambda function and pass along relevant event data (like the name of our Secrets Manager secret), so the function is invoked repeatedly on a schedule.

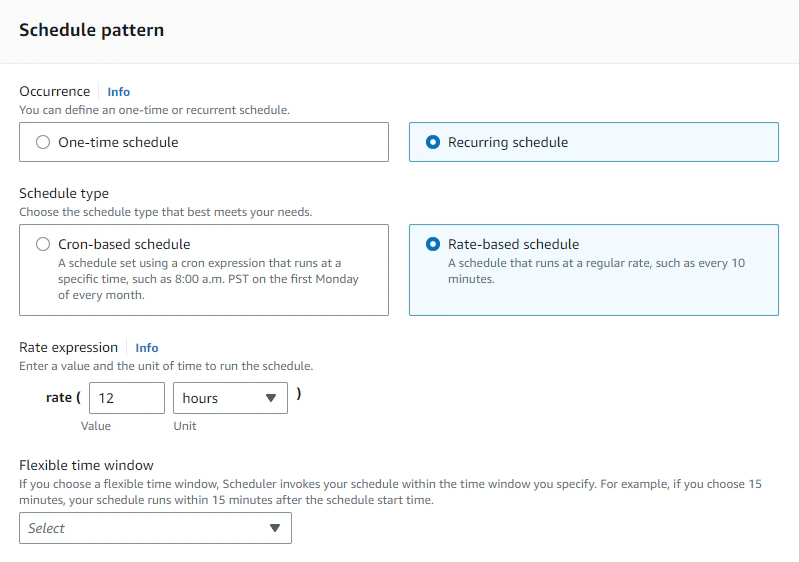

We can create one by heading to EventBridge Scheduler, giving the schedule a name, and specifying the occurrence and schedule type like below:

In the example above, we specify that our Lambda function should be invoked every 12 hours. Furthermore, we can optionally set a time zone and a start date and time.
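Rate-based schedules are written as `rate(value unit)`. Here is a small sketch that builds such an expression and estimates the worst-case recovery point for a given interval, tying the schedule back to the RPO we said we could tolerate. The helper names are hypothetical, and the dump duration you plug in is your own estimate.

```typescript
type RateUnit = "minute" | "hour" | "day";

// Builds a rate expression such as "rate(12 hours)".
// The unit is singular for a value of 1 ("rate(1 hour)") and plural otherwise.
function rateExpression(value: number, unit: RateUnit): string {
  if (value < 1 || Math.floor(value) !== value) {
    throw new Error(`rate value must be a positive integer, got ${value}`);
  }
  return `rate(${value} ${value === 1 ? unit : unit + "s"})`;
}

// Worst-case data-loss window in hours: a failure just before the next dump
// finishes leaves only the previous dump, taken one interval plus one dump
// duration earlier.
function worstCaseRpoHours(intervalHours: number, dumpMinutes: number): number {
  return intervalHours + dumpMinutes / 60;
}
```

`rateExpression(12, "hour")` gives `rate(12 hours)`, matching the schedule above, and with a 30-minute dump the worst-case RPO is about 12.5 hours. A rate schedule counts from when it starts; if you prefer fixed clock times, a cron expression such as `cron(0 0/12 * * ? *)` (00:00 and 12:00 in the schedule's time zone) is the alternative.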

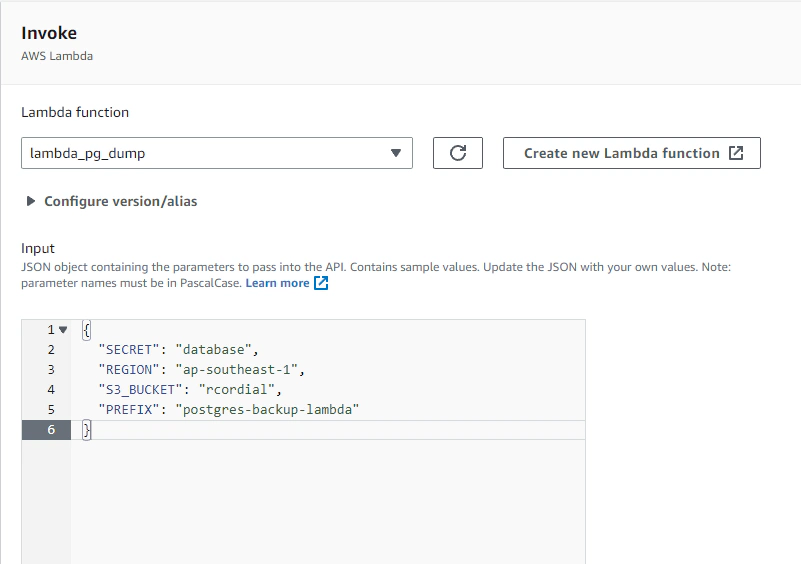

Then we specify the target, which in this case is our Lambda function, and supply the same JSON event we used for testing. EventBridge will pass it to the function on every invocation.
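Because the scheduler replays this payload unattended, a typo (or a placeholder left in from the template) would only surface as a failed run hours later. Here is a minimal sketch of validating the event up front, plus a hypothetical scheme for how `PREFIX` could map to an S3 object key. The helper names and the key format are illustrations, not the function's actual code.

```typescript
// Event fields as accepted by the function.
interface BackupEvent {
  SECRET: string;
  REGION: string;
  S3_BUCKET: string;
  PREFIX?: string;
}

function parseBackupEvent(json: string): BackupEvent {
  const parsed: unknown = JSON.parse(json);
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("event must be a JSON object");
  }
  const raw = parsed as { [key: string]: unknown };
  const field = (key: string, required: boolean): string | undefined => {
    const value = raw[key];
    if (!required && (value === undefined || value === "")) return undefined;
    if (typeof value !== "string" || value === "") {
      throw new Error(`"${key}" must be a non-empty string`);
    }
    // Catch placeholders copied verbatim from the template, e.g. "<aws region>".
    if (value.charAt(0) === "<") {
      throw new Error(`"${key}" still holds a placeholder: ${value}`);
    }
    return value;
  };
  const region = field("REGION", true) as string;
  // Loose sanity check on the region format, e.g. "ap-southeast-1".
  if (!/^[a-z]{2}(-[a-z]+)+-\d+$/.test(region)) {
    throw new Error(`unexpected region "${region}"`);
  }
  return {
    SECRET: field("SECRET", true) as string,
    REGION: region,
    S3_BUCKET: field("S3_BUCKET", true) as string,
    PREFIX: field("PREFIX", false),
  };
}

// Hypothetical key scheme: "<prefix>/<UTC timestamp>.dump", with ":" and "."
// replaced so the key is easy to handle in shells and URLs.
function backupObjectKey(prefix: string | undefined, at: Date): string {
  const stamp = at.toISOString().replace(/[:.]/g, "-");
  const folder = (prefix || "").replace(/^\/+|\/+$/g, "");
  return folder ? `${folder}/${stamp}.dump` : `${stamp}.dump`;
}
```

Running the exact payload you paste into the scheduler target through a check like this before saving it is a cheap way to catch mistakes that would otherwise only show up in the function's logs.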

If you have followed along up to this point, you have now successfully created a Lambda function that runs on a specified schedule to create a dump of a PostgreSQL database. To recap, we:

- Deployed a Lambda function bundled with the pg_dump utility
- Created a Secrets Manager secret to securely store sensitive database credentials
- Automated function invocation by setting up an EventBridge Scheduler schedule
- Implemented the whole thing serverless once again: no server-run cron jobs and no idle compute, resulting in another negligible AWS bill
